5.5.3.2.2. cFp_Memtest¶

Note: This HTML section is rendered based on the Markdown file in cFp_Zoo.

cloudFPGA project (cFp) for changing characters case of a user phrase. The purpose of this project is to establish a starting point for a full-stack software developer with a useful API for common programming languages and development frameworks.

5.5.3.2.2.1. System configuration¶

5.5.3.2.2.1.1. Ubuntu¶

Assuming Ubuntu > 16.04, the following packages should be installed:

sudo apt-get install -y build-essential pkg-config libxml2-dev rename rpl python3-dev python3-venv swig curl

5.5.3.2.2.1.2. CentOS/EL7¶

sudo yum groupinstall 'Development Tools'

sudo yum install cmake

5.5.3.2.2.2. Full-stack software support¶

The following programming languages are currently supported (and are on the roadmap)

[x] C/C++

[ ] Java

[ ] Python

[ ] Javascript

The following programming frameworks are currently supported (and are on the roadmap)

[ ] Spark

The following socket libraries are currently supported (and are on the roadmap)

[ ] Asio

[ ] ZeroMQ

[ ] WebSockets

The following containerization software is currently supported (and is on the roadmap)

5.5.3.2.2.3. Vivado tool support¶

The versions below are supported by cFp_Memtest. As of today we follow a hybrid development approach where a specific part of the SHELL code is synthesized using Vivado 2017.4, while the rest of the HLS, Synthesis, P&R and bitgen are carried out with Vivado 2019.x.

5.5.3.2.2.3.1. For the SHELL (cFDK’s code)¶

[x] 2017

[x] 2017.4

[ ] 2018

[ ] 2019

[ ] 2020

5.5.3.2.2.3.2. For the ROLE (user’s code)¶

[ ] 2017

[ ] 2018

[x] 2019

[x] 2019.1

[x] 2019.2

[x] 2020

[x] 2020.1

5.5.3.2.2.3.2.1. Repository and environment setup¶

git clone --recurse-submodules git@github.com:cloudFPGA/cFp_Vitis.git

cd cFp_Vitis

source ./env/setenv.sh

5.5.3.2.2.3.2.2. Memtest Simulation¶

The testbench is offered in two flavors:

HLS TB: The testbench of the C++/RTL. This is a typical Vivado HLS testbench but it includes the testing of Memtest IP when this is wrapped in a cF Themisto Shell.

Host TB: This includes the testing of a host application (C++) that sends/receives strings over Ethernet (TCP/UDP) with a cF FPGA. This testbench establishes a socket-based connection with an intermediate listener which further calls the previous testbench. So practically, the 2nd TB is a wrapper of the 1st TB, but passing the I/O data over socket streams. For example this is the system command inside Host TB that calls the HLS TB:

// Calling the actual TB over its typical makefile procedure, but passing the save file
string str_command = "cd ../../../../../../ROLE/custom/hls/memtest/ && " + clean_cmd + "\
INPUT_STRING=4096 TEST_NUMBER=2 BURST_SIZE=512 " + exec_cmd + " && \
cd ../../../../../../HOST/custom/memtest/languages/cplusplus/build/ ";
const char *command = str_command.c_str();
cout << "Calling TB with command:" << command << endl;
system(command);

The Makefile passes the following parameters to the TB as arguments:

INPUT_STRING is the maximum target address (e.g., max 1000000 or 1MB)

TEST_NUMBER is the number of repetitions of the test

BURST_SIZE is the desired burst size

5.5.3.2.2.3.2.2.1. Simulation example:¶

make csim INPUT_STRING=4096 TEST_NUMBER=2 BURST_SIZE=512
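The make invocation above can also be assembled programmatically, in the same spirit as the Host TB builds its system command. The following is a minimal sketch only: the helper name build_tb_command and its signature are hypothetical and do not come from the project sources; only the three Makefile variable names are taken from the text.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper (not part of cFp_Memtest): composes the make command
// line that starts the HLS TB with the three Makefile parameters.
std::string build_tb_command(const std::string& target,
                             unsigned input_string,
                             unsigned test_number,
                             unsigned burst_size) {
    assert(!target.empty());  // e.g. "csim", "fcsim" or "cosim"
    return "make " + target +
           " INPUT_STRING=" + std::to_string(input_string) +
           " TEST_NUMBER=" + std::to_string(test_number) +
           " BURST_SIZE=" + std::to_string(burst_size);
}
```

Calling build_tb_command("csim", 4096, 2, 512) yields the same command line as the simulation example.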

Basic files/modules:

memtest_host.cpp: The end-user application. This is the application that a user can execute on a x86 host and send a string to the FPGA for processing with Memtest function. This file is part of both the HLS TB and the Host TB.

memtest_host_fw_tb.cpp: The intermediate listener for socket connections from an end-user application. This file is part only of the Host TB.

test_memtest.cpp: The typical Vivado HLS testbench of Memtest IP, when this is wrapped in a Themisto Shell.

Themisto Shell: The SHELL-ROLE architecture of cF.

cFp_Memtest: The project that bridges Memtest libraries with cF.

Note: Remember to run make clean every time you change those definitions.

5.5.3.2.2.3.2.2.1.1. Memory test modularity¶

Modularity of the memory test:

[src/memtest.cpp](https://github.com/cloudFPGA/cFp_Vitis/blob/master/ROLE/custom/hls/memtest/src/memtest.cpp) contains the TOP module where you may find the three coarse-grained stages: Port&Dst/RX, Processing, TX.

[include/memtest.hpp](https://github.com/cloudFPGA/cFp_Vitis/blob/master/ROLE/custom/hls/memtest/include/memtest.cpp) is the TOP level HEADER with some info on the most basic COMMANDS, such as start/stop for a controllable execution.

[include/memtest_library.hpp](https://github.com/cloudFPGA/cFp_Vitis/blob/master/ROLE/custom/hls/memtest/include/memtest_library.hpp) contains the library for some basic cF components: Port&Dst, RX, TX, Memory R/W utilities, Performance counter utilities.

[include/memtest_processing.hpp](https://github.com/cloudFPGA/cFp_Vitis/blob/master/ROLE/custom/hls/memtest/include/memtest_processing.hpp) contains a template structure of a processing function for the cF environment, following a start/stop approach with command management, processing, and output management. It includes examples of processing functions for the memory test.

[include/memtest_pattern_library.hpp](https://github.com/cloudFPGA/cFp_Vitis/blob/master/ROLE/custom/hls/memtest/include/memtest_pattern_library.hpp) contains the functions used to develop the custom processing algorithm of the memory test: pattern generator functions, read functions, write functions.

A developer may replace the command handling and the processing with their own. In that case the required modifications are minimal and mostly concern the processing functions.
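To make the split between pattern generation, write functions and read functions more concrete, here is a plain C++ sketch of a write-then-verify memory test. It is not the code from memtest_pattern_library.hpp: the function names, the 64-bit word type, the pattern formula and the use of std::vector as the "memory" are all assumptions chosen for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical pattern generator: derives the test value from the address.
inline std::uint64_t pattern_for(std::size_t addr) {
    return static_cast<std::uint64_t>(addr) * 0x9E3779B97F4A7C15ULL + 1ULL;
}

// Write phase: fills the memory in chunks of burst_size words.
void write_pattern(std::vector<std::uint64_t>& mem, std::size_t burst_size) {
    assert(burst_size > 0);
    for (std::size_t base = 0; base < mem.size(); base += burst_size) {
        const std::size_t end = std::min(base + burst_size, mem.size());
        for (std::size_t a = base; a < end; ++a) {
            mem[a] = pattern_for(a);
        }
    }
}

// Read phase: regenerates the pattern and counts mismatching addresses.
std::size_t count_faulty(const std::vector<std::uint64_t>& mem) {
    std::size_t faulty = 0;
    for (std::size_t a = 0; a < mem.size(); ++a) {
        if (mem[a] != pattern_for(a)) {
            ++faulty;
        }
    }
    return faulty;
}
```

Swapping in a different pattern_for is the only change needed to test another pattern, which mirrors the idea that a developer mostly touches the processing functions.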

5.5.3.2.2.3.2.2.1.2. Run simulation¶

HLS TB

cd ./ROLE/custom/hls/memtest/

make fcsim -j 4 # to run simulation using your system's gcc (with 4 threads)

make csim # to run simulation using Vivado's gcc

make cosim # to run co-simulation using Vivado

Optional steps

cd ./ROLE/custom/hls/memtest/

make callgraph # to run fcsim and then execute the binary in Valgrind's callgraph tool

make kcachegrind # to run callgraph and then view the output in the KCachegrind tool

make memcheck # to run fcsim and then execute the binary in Valgrind's memcheck tool (to inspect memory leaks)

Host TB

# Compile sources

cd ./HOST/custom/memtest/languages/cplusplus

mkdir build && cd build

cmake ../

make -j 2

# Start the intermediate listener

# Usage: ./memtest_host_fwd_tb <Server Port> <optional simulation mode>

./memtest_host_fwd_tb 1234 0

# Start the actual user application on host

# Open another terminal and prepare env

cd cFp_Memtest

source ./env/setenv.sh

cd ./HOST/custom/memtest/languages/cplusplus/build

# Usage: ./memtest_host <Server> <Server Port> <number of address to test> <testing times> <burst size> <optional list/interactive mode (type list or nothing)>

./memtest_host 10.12.200.153 1234 4096 2 512

#interactive mode

# You should expect the output in the stdout and a log in a csv file for both average results and single tests

./memtest_host 10.12.200.153 1234 1 1 1 list

# benchmarking mode, running for a fixed number of times the benchmark from the biggest burst size to the shortest

# on incremental number of addresses

# What happens is that the user application (memtest_host) is sending an input string to

# intermediate listener (memtest_host_fwd_tb) through socket. The latter receives the payload and

# reconstructs the string. Then it is calling the HLS TB by firstly compiling the HLS TB files. The

# opposite data flow is realized for taking the results back and reconstruct the FPGA output string.

# You should expect the string output in the stdout.
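The usage line of memtest_host can be read as a fixed sequence of positional arguments followed by an optional mode keyword. The sketch below shows one way such arguments could be parsed; the HostArgs struct and parse_host_args function are hypothetical names and are not taken from memtest_host.cpp.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>

// Hypothetical container for the memtest_host command-line arguments.
struct HostArgs {
    std::string server;
    unsigned short port = 0;
    unsigned long num_addresses = 0;  // <number of address to test>
    unsigned long test_times = 0;     // <testing times>
    unsigned long burst_size = 0;     // <burst size>
    bool list_mode = false;           // true when the optional "list" is given
};

// Parses argv in the order given by the usage comment.
// Returns false when the argument count does not match.
bool parse_host_args(int argc, const char* const argv[], HostArgs& out) {
    assert(argv != nullptr);
    if (argc < 6 || argc > 7) {
        return false;
    }
    out.server = argv[1];
    out.port = static_cast<unsigned short>(std::strtoul(argv[2], nullptr, 10));
    out.num_addresses = std::strtoul(argv[3], nullptr, 10);
    out.test_times = std::strtoul(argv[4], nullptr, 10);
    out.burst_size = std::strtoul(argv[5], nullptr, 10);
    out.list_mode = (argc == 7 && std::string(argv[6]) == "list");
    return true;
}
```

With the arguments 10.12.200.153 1234 4096 2 512 the sketch leaves list_mode false; appending list sets it to true.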

5.5.3.2.2.3.2.3. Memtest Synthesis¶

Since the cFDK currently supports only Vivado (HLS) 2017.4, we follow a two-step synthesis procedure. First we synthesize the Themisto SHELL with Vivado (HLS) 2017.4, and then we synthesize the rest of the project (including P&R and bitgen) with Vivado (HLS) >= 2019.1.

By default the Memory Test comes with #define SIMPLER_BANDWIDTH_TEST commented out, which means that you will use the complex memory test that can handle any variable burst size. To change this, uncomment the define.

5.5.3.2.2.3.2.3.1. The Themisto SHELL¶

cd cFp_Vitis/cFDK/SRA/LIB/SHELL/Themisto

make all # with Vivado HLS == 2017.4

5.5.3.2.2.3.2.3.2. The complete cFp_Memtest¶

cd cFp_Vitis

make monolithic # with Vivado HLS >= 2019.1

Optional HLS only for the Memtest IP (e.g. to check synthesizability)

cd cFp_Vitis/ROLE/custom/hls/memtest/

make csynth # with Vivado HLS >= 2019.1

5.5.3.2.2.3.2.4. Memtest cF Demo¶

TODO: Flash a cF FPGA node with the generated bitstream and note down the IP of this FPGA node. e.g. assuming 10.12.200.153 and port 2718

cd ./HOST/custom/memtest/languages/cplusplus/

mkdir build && cd build

cmake ../

make -j 2

# Usage: ./memtest_host <Server> <Server Port> <number of address to test> <testing times> <burst size> <optional list/interactive mode (type list or nothing)>

./memtest_host 10.12.200.153 2718 4096 2 512

#interactive mode

# You should expect the output in the stdout and a log in a csv file for both average results and single tests

./memtest_host 10.12.200.153 2718 1 1 1 list

# benchmarking mode, running for a fixed number of times the benchmark from the biggest burst size to the shortest

# on incremental number of addresses

NOTE: The cFp_Memtest ROLE (FPGA part) is equipped with both the UDP and TCP offload engines. At runtime, on the host, to select one over the other, you simply need to change the define #define NET_TYPE udp in the config.h file (choose either udp or tcp).
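As an illustration of how a define like NET_TYPE can steer the host code, the sketch below selects a transport with the preprocessor. It assumes that udp and tcp are themselves numeric macros so that they can be compared in an #if; that detail, the function name selected_transport, and the static_assert are assumptions and may differ from the real config.h.

```cpp
#include <string>

// Assumption: udp and tcp are numeric macros, so #if can compare them.
#define udp 0
#define tcp 1
#define NET_TYPE udp  // choose either udp or tcp

static_assert(NET_TYPE == udp || NET_TYPE == tcp,
              "NET_TYPE must be either udp or tcp");

// Hypothetical helper: reports which transport this build was compiled for.
// Macros are not expanded inside string literals, so "udp" stays a string.
std::string selected_transport() {
#if NET_TYPE == udp
    return "udp";
#else
    return "tcp";
#endif
}
```

In this sketch, changing the define takes effect only after the host application is rebuilt.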

5.5.3.2.2.3.2.4.1. JupyterLab example¶

NOT UP TO DATE

cd HOST/languages/python

mkdir build

cd build

cmake ../

make -j 2

python3 -m venv env # to create a local dev environment

# note you will have a "env" before the prompt to remind

# you the local environment you work in

source env/bin/activate #

pip3 install -r ../requirements.txt # to be improved : some errors might occur depending on environment

5.5.3.2.2.3.2.5. Useful commands¶

Connect to ZYC2 network through openvpn:

sudo openvpn --config zyc2-vpn-user.ovpn --auth-user-pass up-user

Connect to a ZYC2 x86 node:

ssh -Y ubuntu@10.12.2.100

On Wireshark filter line:

udp.port==2718 or tcp.port==2718

Quick bitgen: sometimes it accelerates the build process of make monolithic. After a successful build, execute make save_mono_incr and then build the new design with make monolithic_incr or make monolithic_debug_incr.

Docker:

docker exec -it distracted_ishizaka /bin/bash

Possible resources/stack limitation on Linux-based systems:

ulimit -a to see the current limitations

ulimit -c unlimited to unlimit “The maximum size of core files created”

ulimit -s unlimited to unlimit “The maximum stack size.”

5.5.3.2.2.3.2.6. Working with ZYC2¶

All communication goes over the UDP/TCP port 2718. Hence, the CPU should run:

$ ./memtest_host <Server> <Server Port> <input string>

The packets will be sent from Host (CPU) Terminal 1 to the FPGA and they will be received back in the same terminal by a single host application using the sendTo() and receiveFrom() socket methods.

For more details, tcpdump -i <interface> -nn -s0 -vv -X port 2718 could be helpful.

The Role can be replicated to many FPGA nodes in order to create a processing pipeline. The destination of the packets is determined by the node_id/node_rank and cluster_size (VHDL ports piFMC_ROLE_rank and piFMC_ROLE_size).

The **Role can be configured to always forward the packet to (node_rank + 1) % cluster_size** (for UDP and TCP packets), so this example also works for more or fewer than two FPGAs. Currently, the default example supports one CPU node and one FPGA node.
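The forwarding rule can be written down directly. The sketch below is only an illustration in host-side C++ (the function name next_node_rank is hypothetical); in the actual design the rank and size arrive through the VHDL ports mentioned above.

```cpp
#include <cassert>
#include <cstdint>

// Returns the rank that receives the packet next, following the
// (node_rank + 1) % cluster_size rule, so the last node wraps to rank 0.
std::uint32_t next_node_rank(std::uint32_t node_rank,
                             std::uint32_t cluster_size) {
    assert(cluster_size > 0 && node_rank < cluster_size);
    return (node_rank + 1) % cluster_size;
}
```

For a cluster of two nodes, rank 0 forwards to rank 1 and rank 1 forwards back to rank 0; with three nodes, rank 2 wraps around to rank 0.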

For distributing the routing tables, **POST /cluster** must be used.

The following depicts an example API call, assuming that the cFp_Memtest bitfile was uploaded as image d8471f75-880b-48ff-ac1a-baa89cc3fbc9:

5.5.3.2.2.4. Firewall issues¶

Some firewalls may block network packets if there is no connection to the remote machine/port. Hence, to get the Triangle example to work, the following commands may need to be executed (as root):

$ firewall-cmd --zone=public --add-port=2718-2750/udp --permanent

$ firewall-cmd --zone=public --add-port=2718-2750/tcp --permanent

$ firewall-cmd --reload

Also, ensure that the network security group settings are updated (e.g. in case of the ZYC2 OpenStack).

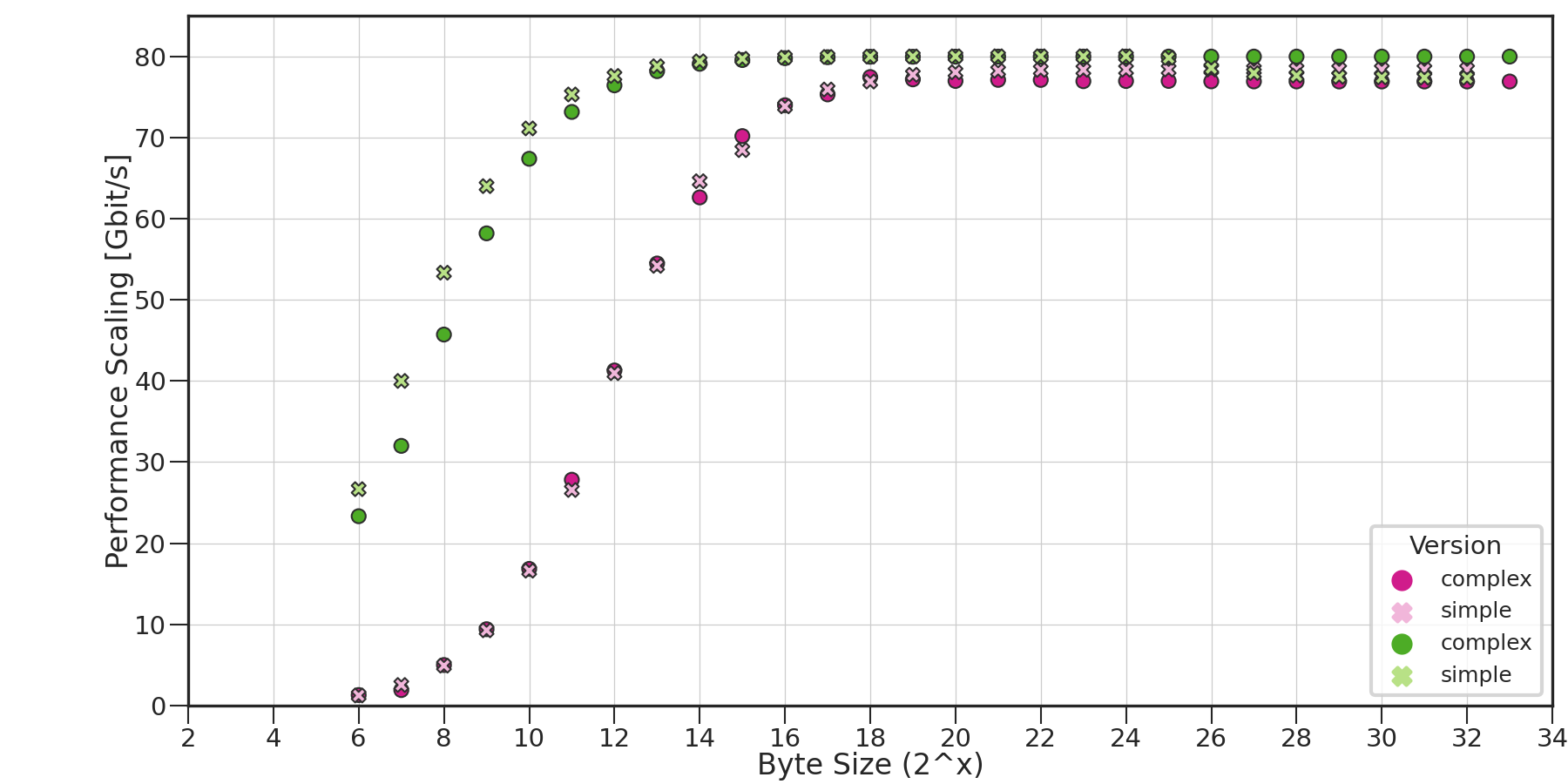

5.5.3.2.2.5. Memory test Results¶

Below are some expected outputs of the memory test in the simple (i.e., free-running) and the complex (i.e., burst-controlled) versions. First comes an analysis of the burst-controlled bandwidth for reads and writes, covering power-of-two memory sizes starting from 64 bytes (i.e., one 512-bit word).
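The arithmetic behind such results can be sketched as follows. Only the 64-byte / 512-bit word size comes from the text; the function names, the cycle-count input and the clock frequency parameter are assumptions for illustration and do not describe how the project's performance counters are actually read out.

```cpp
#include <cassert>
#include <cstdint>

constexpr std::uint64_t kWordBits = 512;            // one memory word
constexpr std::uint64_t kWordBytes = kWordBits / 8; // 64 bytes

// Number of 512-bit words needed to cover `bytes`, rounded up.
constexpr std::uint64_t words_for(std::uint64_t bytes) {
    return (bytes + kWordBytes - 1) / kWordBytes;
}

// Bandwidth in gigabytes per second, given the bytes moved, the elapsed
// clock cycles and an assumed clock frequency in MHz.
double bandwidth_gbytes_per_s(std::uint64_t bytes,
                              std::uint64_t cycles,
                              double clock_mhz) {
    assert(cycles > 0 && clock_mhz > 0.0);
    const double seconds = static_cast<double>(cycles) / (clock_mhz * 1e6);
    return static_cast<double>(bytes) / seconds / 1e9;
}
```

For example, a 4096-byte test covers 64 words, and moving one 64-byte word per cycle at an assumed 156.25 MHz corresponds to 10 GB/s.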

5.5.3.2.2.5.1. Bandwidth Burst Control Results¶

Burst: 1 -> 512 (powers of two)
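The benchmarking mode of the host application is described as running from the biggest burst size to the shortest. A sweep over power-of-two burst sizes could be generated as in this sketch; the function name burst_sweep is hypothetical and the real host code may organize the loop differently.

```cpp
#include <cassert>
#include <vector>

// Burst sizes from max_burst down to 1, halving at each step.
// max_burst is expected to be a power of two (e.g. 512).
std::vector<unsigned> burst_sweep(unsigned max_burst) {
    assert(max_burst > 0 && (max_burst & (max_burst - 1)) == 0);
    std::vector<unsigned> sizes;
    for (unsigned b = max_burst; b >= 1; b /= 2) {
        sizes.push_back(b);
    }
    return sizes;
}
```

For max_burst = 512 this gives ten burst sizes: 512, 256, 128, 64, 32, 16, 8, 4, 2, 1.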

5.5.3.2.2.5.2. Bandwidth read and write results: comparison of simple and complex memory tests (i.e. free-running vs burst-controlled)¶